Photo by Sangga Rima Roman Selia on Unsplash

Here is my Log book

Git repository for the functions.

Next objectives for the Lego database project

I think the following features would make for some interesting learning.

- Be able to download the lego set image from the stored URL.

- Be able to schedule this image download function.

- When the function runs, it will check the database for any sets that do not yet have the image downloaded and then add them to a queue to be processed. Note: You should know by now that I am not writing production code here and thus the design choice I am making here is to not worry about what will happen if images are being downloaded and another scheduling job was kicked off. Also I don’t care if an image can never be downloaded. Possible solutions would be to track state and have a failed counter to know when to just give up forever on a set.

- Send out an email on each successful database update.

Computer, resume program

I have rebooted since I last played around with faasd and thus the instance need to be started again.

P.S. Do not just run "multipass start" because it will create a new instance called primary.

$ multipass start faasd

$ faas-cli list

Function Invocations Replicas

legodb 0 0

nodeinfo 0 0

I also had to start the MariaDB instance on AWS. Kept getting a timeout while invoking my function and then I remembered that I stopped the database on RDS.

Speeding up development time

At the moment I make a change to the python code and then run faas-cli up to build and deploy and then invoke the function only to discover something blew up. This is not ideal. Really you would first do most of your development locally and only deploy as OpenFaaS once you have a good chunk working.

To help me in this I created a file called indev.py that I use to develop/explore code locally. indev stands for In Development and is a term I have used for a good 20 years for this kind of temporary / boot strap code (when not using TDD).

from legodb.handler import handle

from pprint import pprint

class Event:

def __init__(self):

self.body = None

self.headers = None

self.method = 'GET'

self.query = None

self.path = '/legosets'

class Context:

def __init__(self):

self.hostname = 'localhost'

if __name__ == "__main__":

event = Event()

context = Context()

response = handle(event, context)

print(f'{event.path}')

pprint(response)

print('')

I will need the secrets locally so I have created the 3 secrets required at: /var/openfaas/secrets/

$ sudo mkdir -p /var/openfaas/secrets/

$ sudo chown -R andre:staff /var/openfaas

# Then created the files (I actually copied this directly from my faasd instance

database-host database-password database-user

Shiny object distraction

At first I was going to use alembic to manage database migrations from SQLAlchemy but I ran into import issues that was just eating up to much of my time. So I will revisit using alembic another time.

Learning action point: Importing modules and files in Python has always been a bit of black magic to me. I need to spend some time to really understand how the import process works.

Initially I was just going to store the image data as blob in the database, but this is never a good idea and even this little learning/toy project it just wouldn’t feel correct. So after a bit of googling it seemed that one option would be to use SQLAlchemy-ImageAttach. But this also turned out to be more of time sinkhole than me actually learning the bits I wanted to learn about.

So instead I will just have a column in the table to keep reference to where the image lives on disk after it has been downloaded. Simples.

Altering the Database

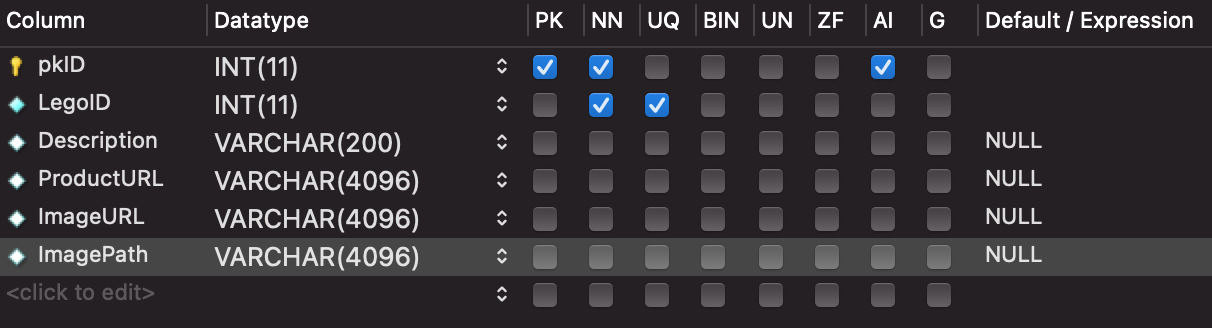

I will be altering the table directly in MySQLWorkbench and just update the models.py file.

Add a new column called ImagePath as VARCHAR(4096) which is the max path length on Linux.

ALTER TABLE `openfaasdb`.`legosets`

ADD COLUMN `ImagePath` VARCHAR(4096) NULL AFTER `ImageURL`;

Updated the models.py file and ran the local test (python indev.py)

class LegoSet(Base):

__tablename__ = 'legosets'

id = Column('pkID', Integer, primary_key=True, nullable=False)

legoID = Column('LegoID', Integer, unique=True, nullable=False)

description = Column('Description',String(200))

productURL = Column('ProductURL',String(4096))

imageURL = Column('ImageURL',String(4096))

imagePath = Column('ImagePath',String(4096))

Get the list of Lego sets that require an image download

The scheduled function will need a list of the Lego sets that still require the image to be downloaded.

The query will simply just return Lego sets that have an ImageURL set and that does not have an ImagePath set (returning max number specified by the limit parameter). For now we don’t care if the image is already in process of being downloaded or if the image doesn’t exist and thus we need to just give up after X retries.

# models.py

from sqlalchemy import and_

...

def get_legosets_that_need_an_image_download(session, limit=10):

legosets = session.query(LegoSet).filter(

and_(

LegoSet.imageURL.is_not(None),

LegoSet.imagePath.is_(None)

)

).limit(limit)

return legosets

To test that this query returns the expected sets I added some dummy data.

INSERT INTO `openfaasdb`.`legosets` (`LegoID`, `Description`, `ProductURL`) VALUES ('6000', 'Does not have an ImageURL', 'Blah');

INSERT INTO `openfaasdb`.`legosets` (`LegoID`, `Description`, `ProductURL`, `ImageURL`, `ImagePath`) VALUES ('6001', 'Does have both', 'Blah', 'blah', 'blah');

INSERT INTO `openfaasdb`.`legosets` (`LegoID`, `Description`, `ProductURL`, `ImagePath`) VALUES ('6002', 'Have path but not image url', 'Blah', 'blah');

Adding a new API end point that the scheduler will use to download images

The cron scheduler will be invoking a PUT to /legosets-download-images. This function will get the list of Lego sets that require images and will then use a task queue to do the actual work. The response will simply be the Lego ID and the Image URL that will be processed next.

… and yes I know this is not really RESTful.

# handler.py

# Added to the hacked router

elif event.method == 'PUT':

if event.path == '/legosets-download-images':

response = download_legoset_images()

...

def download_legoset_images():

session = create_database_session()

legosets = get_legosets_that_need_an_image_download(session, limit=2)

result = []

for legoset in legosets:

result.append({

'legoID': legoset.legoID,

'imageURL': legoset.imageURL

})

session.close()

return {"sets": result}

Tested locally

$ python indev.py

/legosets-download-images

{'sets': [{'imageURL': 'https://live.staticflickr.com/65535/49698377257_be3f773feb_b.jpg',

'legoID': 21322},

{'imageURL': 'https://images.brickset.com/sets/AdditionalImages/21325-1/21325-1_95850.jpg',

'legoID': 21325}]}

Next actions

Tomorrow I will start exploring a task queue. I have used Celery in the past but recently I learned about Redis Queue so I am keen to explore this.