
Here is my log book.

Git repository for the functions.

Build in public, fail in public and win in public.

My development plan was as follows:

- The API endpoint /legosets-download-images will be scheduled to run every few minutes.

- It will find the next N Lego sets in the database that do not yet have a cached image on disk.

- These will be added to the task queue, and each task will download the image from its specified image URL.

- Once an image has been downloaded, the database will be updated and an email task will be created, which in turn will send me an email to notify me that the image has been processed.
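The plan above can be sketched in plain Python. This is a rough illustration, not the code in the repository: the post does not show the real schema or task functions, so a hypothetical lego_sets table in SQLite and a queue.Queue stand in for the real database and the RQ queue, and the function names are made up.

```python
import queue
import sqlite3

# Hypothetical schema: the post does not show the real legodb tables.
SCHEMA = """
CREATE TABLE lego_sets (
    set_num    TEXT PRIMARY KEY,
    image_url  TEXT NOT NULL,
    image_path TEXT  -- NULL until the image has been cached on disk
)
"""


def find_sets_without_images(conn, limit):
    """Return up to `limit` (set_num, image_url) rows that have no cached image."""
    cursor = conn.execute(
        "SELECT set_num, image_url FROM lego_sets "
        "WHERE image_path IS NULL ORDER BY set_num LIMIT ?",
        (limit,),
    )
    return cursor.fetchall()


def schedule_downloads(conn, task_queue, limit=5):
    """What the scheduled endpoint would do: queue one download task per set."""
    pending = find_sets_without_images(conn, limit)
    for set_num, image_url in pending:
        task_queue.put(("download_image", set_num, image_url))
    return len(pending)


def mark_image_cached(conn, set_num, image_path, task_queue):
    """After a successful download: record the path, then queue the email task."""
    conn.execute(
        "UPDATE lego_sets SET image_path = ? WHERE set_num = ?",
        (image_path, set_num),
    )
    conn.commit()
    task_queue.put(("send_email", set_num))
```

In the real setup the queue.Queue would be an RQ queue backed by Redis, and the tuples would be enqueued function calls. The flow of "find N, enqueue, update, notify" stays the same.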

At least that was the plan!

Update: There is nothing wrong with OpenFaaS, faasd, RQ or any of the other tech used here. They are all amazing projects and I highly recommend you try them out. I prototyped an idea and learned where I went wrong, and now I have to be brutally honest about my priorities and the time I have available to work on them. The project is shelved for now until I come back to build version 2 (I already know this will bug me until I fix it).

I ran into issues setting up the RQ worker on the multipass instance running faasd. Long story short, the task worker needs access to the Python source code.

I also ran into issues with the module that serves the task not loading correctly.

In the end I decided to keep running the RQ worker on my development machine so that I could crack on with implementing the rest.

That actually worked! I had this crazy setup: an OpenFaaS function running on the faasd instance that, when invoked, would enqueue tasks on a Redis queue for the images that needed downloading. The worker ran on my local machine and picked up the download tasks.

However, this is where it all fell apart. The RQ worker needs to run in the correct directory for the task module to load, and, even more importantly, the images would be cached on the worker's machine, which is not where I wanted them.

So I am calling it quits, and a failure. I accomplished most of what I set out to learn about OpenFaaS and faasd. Solving the issues with this last "feature" would mean sinking a lot more time into redesign, development, testing, deployment and troubleshooting just so I can tick a self-imposed box, and I simply do not have the time for that at the moment. On the other hand, I have learned an absolute ton in the past 22 days.

Round 2 idea?

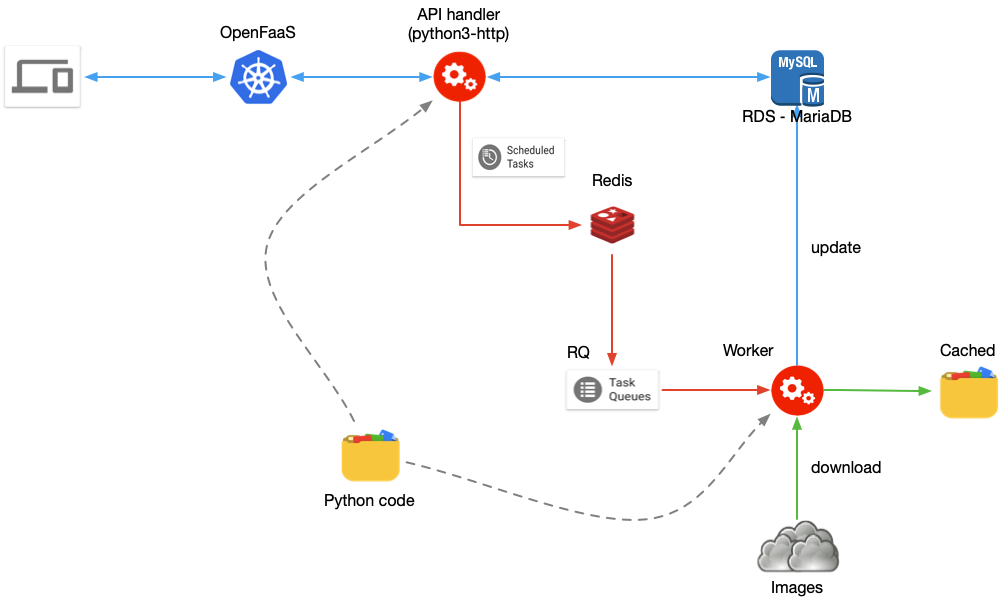

Update: After writing this post yesterday, I wanted to diagram how I think the correct solution should be laid out (at least as a very high-level concept).

Originally this learning project grew organically and I threw things into the mix as I went along. Fixing the issues I currently face, however, means planning up front how services will be deployed and so on. The diagram above will serve as my reference point when I come back for round 2.

faas-cli up failed because of disk space

To add insult to injury, I tried to redeploy the latest code of the function and encountered these errors.

```text
Unexpected status: 500, message: unable to pull image docker.io/andrejacobs42/legodb:latest: cannot pull: mkdir /var/lib/containerd/io.containerd.content.v1.content/ingest/808e55d533faf2f2ed3943adb2abec19815d0ee8af6efb140d284ad7459a631a: no space left on device: unknown
unable to create container: legodb, error: failed to create prepare snapshot dir: mkdir /var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/new-798458060/fs: no space left on device: unknown
```

I will have to spend some time freeing up space on the VM. It looks like it was created with only 4.7GB of disk space.
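A quick way to keep an eye on this from Python is shutil.disk_usage, which reports the total, used and free bytes of the filesystem that holds a given path. The default path comes from the containerd paths in the errors above. The function name and the 1 GB threshold are arbitrary, illustrative choices.

```python
import shutil


def free_space_report(path="/var/lib/containerd", min_free_gb=1.0):
    """Return (free_gb, enough) for the filesystem that holds `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    free_gb = usage.free / 1024**3
    return round(free_gb, 2), free_gb >= min_free_gb
```

Run on the VM before a faas-cli up, a False in the second position would flag that image pulls are likely to fail.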

Ignore the rest that follows

The content below is part of my journey trying to make sense of how things work and connect, which ultimately showed me that I would need to redesign how it all fits together.

Testing email still works

On Day 5 of my journey I set up sendmail on the instance. It has been a while since I used it, so it is best to confirm it still works.

```shell
# SSH into the faasd instance
$ ssh -i ~/.ssh/id_rsa -o PreferredAuthentications=publickey ubuntu@192.168.64.4

# Send a test email
ubuntu@faasd:~$ echo "Hello world!" | sendmail -v email@address.com

# It worked!
```
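The email task from the plan could hand a message to the same sendmail binary from Python. This is a sketch only: build_notification and send_with_sendmail are hypothetical helpers, and the subject and body wording are invented. The -t flag tells sendmail to take the recipients from the message's To header.

```python
import subprocess
from email.message import EmailMessage


def build_notification(to_addr, set_num, image_path):
    """Hypothetical notification sent once a set's image has been cached."""
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["Subject"] = f"Lego set {set_num}: image processed"
    msg.set_content(f"The image for set {set_num} was cached at {image_path}.")
    return msg


def send_with_sendmail(msg):
    """Pipe the message to sendmail; -t reads recipients from the To header."""
    subprocess.run(["sendmail", "-t"], input=msg.as_bytes(), check=True)
```

check=True makes a failing sendmail raise CalledProcessError. That is useful inside a queued task, because the job then shows up as failed instead of silently succeeding.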

I added a new secret that holds the email address to which the function will send notifications.

```shell
# Copy the email address on which you want to receive notifications
# Then create the faasd secret
$ pbpaste | faas-cli secret create notification-email

# Reminder: The secrets are stored on the faasd instance at:
# /var/lib/faasd-provider/secrets
```
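Inside the function, the secret is read as a file rather than an environment variable. OpenFaaS mounts secrets into the function's container under /var/openfaas/secrets/<name>; that path is taken from the OpenFaaS docs, not from this post. read_secret is a hypothetical helper. It strips whitespace in case the pasted value ends with a newline.

```python
from pathlib import Path

# Where OpenFaaS mounts secrets inside a function's container
# (on the faasd host they live under /var/lib/faasd-provider/secrets).
SECRETS_DIR = "/var/openfaas/secrets"


def read_secret(name, secrets_dir=SECRETS_DIR):
    """Return the secret's value with surrounding whitespace removed."""
    return (Path(secrets_dir) / name).read_text().strip()
```

In the function, read_secret("notification-email") would then supply the To address for the email task.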

Setting up the RQ worker on the faasd instance

I am going to be lazy here and just install the RQ worker directly on the Ubuntu instance (multipass) that is running faasd etc.

Why? Firstly because I want to get this learning project finished, and secondly because I currently lack the knowledge to set this up in the best way. My guess is that something like cloud-init could take care of all of this.

Install dependencies including RQ.

```shell
# On the faasd instance (multipass vm)
ubuntu@faasd:~$ pwd
/home/ubuntu
ubuntu@faasd:~$ python3 --version
Python 3.8.5

# Install pip and venv
ubuntu@faasd:~$ sudo apt install python3-pip
ubuntu@faasd:~$ pip3 --version
pip 20.0.2 from /usr/lib/python3/dist-packages/pip (python 3.8)
ubuntu@faasd:~$ sudo apt install python3-venv

# Create a new virtual environment
ubuntu@faasd:~$ mkdir -p deps
ubuntu@faasd:~$ cd deps
ubuntu@faasd:~/deps$ python3 -m venv ./venv
ubuntu@faasd:~/deps$ source venv/bin/activate
(venv) ubuntu@faasd:~/deps$

# Install RQ
(venv) ubuntu@faasd:~/deps$ pip3 install rq
...
Installing collected packages: redis, click, rq
Successfully installed click-7.1.2 redis-3.5.3 rq-1.7.0

# Freeze requirements
(venv) ubuntu@faasd:~/deps$ pip3 freeze > requirements.txt
(venv) ubuntu@faasd:~/deps$ cat requirements.txt
click==7.1.2
redis==3.5.3
rq==1.7.0
```

Start a worker. The function's legodb.yml sets "queue-name: everything-is-awesome" and "redis-url: redis://10.62.0.1:9988/0", so the worker uses the same queue name and Redis URL.

```shell
(venv) ubuntu@faasd:~/deps$ rq worker -u 'redis://10.62.0.1:9988/0' everything-is-awesome
11:20:56 Worker rq:worker:110ee88ebe134e14a707e64de4c34ef1: started, version 1.7.0
11:20:56 Subscribing to channel rq:pubsub:110ee88ebe134e14a707e64de4c34ef1
11:20:56 *** Listening on everything-is-awesome...
11:20:56 Cleaning registries for queue: everything-is-awesome
```
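The worker and the function only meet in Redis if they agree on host, port and database number, and all three are packed into that one URL. redis-py's Redis.from_url accepts this format directly. The hypothetical helper below unpacks it by hand only to make each part visible. It assumes Redis's default port 6379 when the URL leaves the port out.

```python
from urllib.parse import urlsplit


def parse_redis_url(url):
    """Split a redis://host:port/db URL into (host, port, db)."""
    parts = urlsplit(url)
    if parts.scheme != "redis":
        raise ValueError(f"not a redis:// URL: {url!r}")
    db = int(parts.path.lstrip("/") or 0)
    # 6379 is Redis's default port when the URL does not specify one.
    return parts.hostname, parts.port or 6379, db
```

For example, parse_redis_url("redis://10.62.0.1:9988/0") gives ("10.62.0.1", 9988, 0).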

Deploy the latest version of the function and test

```shell
$ ./up.sh
$ curl -X PUT http://192.168.64.4:9999/function/legodb/legosets-download-images
# This worked

# However checking the output from the RQ worker
...
ModuleNotFoundError: No module named 'function'
```

Something I kept wondering about while playing around with RQ was how it knew how to run the actual Python code. I assumed something clever happened when you enqueued a task: somehow the code got reflected, serialized and stored in Redis, and the worker would then pick up the task, deserialize the Python and invoke it. But then how would dependencies and so on work?

Lol, I should have known it would not be that complex or clever. I am actually glad it turns out to be the easiest scenario to explain.

```shell
# On my local Mac, where the RQ worker ran without issues
$ cd ~/some-other-dir-where-the-lego-code-does-not-exist

# Spin up the worker
$ rq worker -u 'redis://192.168.64.4:9988/0' johnny5

# Run the testing code that adds a task ...
ModuleNotFoundError: No module named 'legodb'
```

OK, that confirms it: the RQ worker needs access to the same source code in order to run the tasks.
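These errors fit how RQ is generally understood to work. A job stores a reference to the function as an import path (module name plus function name) along with its pickled arguments. It does not store the code itself, and the worker re-imports that path. That would also explain No module named 'function', since OpenFaaS's Python templates appear to keep the handler in a package called function. The sketch below mimics the lookup with importlib only. task_reference and resolve_task are hypothetical helpers, not RQ's API, and they ignore details such as methods on classes.

```python
import importlib


def task_reference(func):
    """Describe a function the way a job queue can store it: as an import path."""
    return f"{func.__module__}.{func.__qualname__}"


def resolve_task(reference):
    """Import the module named in `reference` and fetch the function from it.

    Raises ModuleNotFoundError when the module is not importable on this
    machine, which matches the failure the workers showed above.
    """
    module_name, _, func_name = reference.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, func_name)
```

Because only the name travels through Redis, every worker needs the same package importable from its own working directory or environment.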

Right, so now the problem is that the same code my function uses would also have to be deployed wherever the RQ worker(s) live, and the copies kept in sync.

This is way too much of a **** ache for what I want to accomplish in this learning project. So instead I am going to cop out and just run the worker on my local development setup.

However, this still achieves my overall goal, which is to get out of my comfort zone and learn new things.

Although this feels like a big fail, it is a win at the same time because I have made some key discoveries here.

- faasd uses containerd (not actually Docker)

- docker-compose.yaml spins up containers used by OpenFaaS sub components

- Your functions access services using the IP 10.62.0.1 and the "external" port

- RQ workers need access to the Python code that they will need to run

Troubleshooting the Redis connection

TL;DR: The function also needs to use port 9988 to connect to Redis.

Conclusion: The "external" port specified in docker-compose.yaml is also the port your functions need to use. For example, my Redis setup specifies "9988:6379". The external port, 9988, is exposed not only to the local network but also to other containers (where the functions run).

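Of course! All docker-compose.yaml does is spin up a separate container for Redis, which is not the container the function runs in. Redis listens on 6379 inside its own container, but the host, including its bridge address 10.62.0.1, only exposes Redis through the published port, 9988.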
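That rule can be written down as a tiny helper. Take the host (left-hand) side of a compose port mapping and pair it with the bridge IP. The helpers are hypothetical and only handle the common "HOST:CONTAINER" and "IP:HOST:CONTAINER" string forms, not IPv6 host addresses or port ranges.

```python
def published_port(mapping):
    """Return the host ("external") port from a compose port mapping string."""
    parts = mapping.split(":")
    if len(parts) < 2:
        # A bare "6379" publishes to a random host port, so there is no fixed one.
        raise ValueError(f"no host port in {mapping!r}")
    return int(parts[-2])


def function_redis_url(bridge_ip, mapping, db=0):
    """Build the URL a function should use: bridge IP plus the published port."""
    return f"redis://{bridge_ip}:{published_port(mapping)}/{db}"
```

With the values from this setup, function_redis_url("10.62.0.1", "9988:6379") gives "redis://10.62.0.1:9988/0", the same URL the worker was started with.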

Below is the journey I took to figure this out.

```shell
$ curl -X PUT http://192.168.64.4:9999/function/legodb/legosets-download-images
...
<title>500 Internal Server Error</title>

$ faas-cli logs legodb
...
2021-03-29T09:10:41Z 2021/03/29 09:10:41 stderr: raise ConnectionError(self._error_message(e))
2021-03-29T09:10:41Z 2021/03/29 09:10:41 stderr: redis.exceptions.ConnectionError: Error 111 connecting to 10.62.0.1:6379. Connection refused.
```

Looks like the function can’t make a connection to the Redis service at "10.62.0.1:6379".
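"Connection refused" (error 111 on Linux) means nothing is listening on that address and port. A minimal TCP probe, run from the faasd VM, makes it easy to compare ports such as 6379 and 9988 on 10.62.0.1 side by side. port_is_open is a hypothetical helper built on socket.create_connection.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds.

    A refused connection or a timeout both count as closed.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # ConnectionRefusedError and timeouts are both OSError subclasses
        return False
```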

Time to hit the books and google again.

Are the IP addresses correct?

```shell
# On the faasd instance (multipass vm)
ubuntu@faasd:~$ ifconfig
...
enp0s2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.64.4 netmask 255.255.255.0 broadcast 192.168.64.255
...
openfaas0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.62.0.1 netmask 255.255.0.0 broadcast 10.62.255.255
```

OK, both the IP address on my local network and the openfaas0 bridge address are correct. According to the faasd documentation and ebook, 10.62.0.1 is the IP exposed to the functions, and all the core services can be reached at that address.
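The netmask 255.255.0.0 on openfaas0 makes the bridge a /16. Every container attached to it gets an address in 10.62.0.0/16 and can reach the host side of the bridge at 10.62.0.1. Python's ipaddress module can check that. BRIDGE and on_bridge are illustrative names.

```python
import ipaddress

# The openfaas0 bridge from the ifconfig output: 10.62.0.1 with netmask 255.255.0.0.
BRIDGE = ipaddress.ip_interface("10.62.0.1/255.255.0.0")


def on_bridge(ip):
    """True if `ip` falls inside the openfaas0 bridge network."""
    return ipaddress.ip_address(ip) in BRIDGE.network
```

The LAN address 192.168.64.4 is not in that network. The function containers therefore talk to the host through 10.62.0.1, not through the VM's LAN IP.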

Let me check the Redis setup.

```shell
ubuntu@faasd:~$ sudo nano /var/lib/faasd/docker-compose.yaml
...
  redis:
    ports:
      - "9988:6379"
```

OK, I know that Redis can be reached from my Mac on port 9988, so perhaps the function should also use 9988. My assumption here was that 6379 is the internal port on 10.62.0.1.

Instead of just changing the environment variable and redeploying the function I want to first see if I can explore this a bit more.

```shell
# Local Mac
# It is handy to deploy the nodeinfo function on faasd and keep it around
$ echo verbose | faas-cli invoke nodeinfo
...
eth1: [
  {
    address: '10.62.0.28',
    netmask: '255.255.0.0',
    family: 'IPv4',
    mac: '52:9c:57:20:b7:08',
    internal: false,
    cidr: '10.62.0.28/16'
  },
# Ok looks like 10.62.0.28 is the IP address used by the container running the nodeinfo function.

# On the faasd instance
# Install netstat using: sudo apt install net-tools
ubuntu@faasd:~$ netstat -tuna
...
tcp 0 0 10.62.0.1:47556 10.62.0.26:6379 ESTABLISHED
tcp 0 0 10.62.0.1:47554 10.62.0.26:6379 ESTABLISHED
tcp6 0 0 :::9988 :::* LISTEN
tcp6 0 0 192.168.64.4:9988 192.168.64.1:57559 ESTABLISHED
```

OK, so port 6379 is open on 10.62.0.26, which I am guessing is the Redis container.
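When the netstat output is long, a small parser can pull out the two facts that matter here: which ports the host is listening on, and where connections to 6379 actually go. In the excerpt above, the host listens on 9988, while 6379 only appears as the remote port on 10.62.0.26. The helpers are hypothetical. They assume the usual six-column TCP rows of netstat -tuna and skip everything else.

```python
def parse_tcp_rows(netstat_output):
    """Yield (local, remote, state) for each TCP row of `netstat -tuna` output."""
    for line in netstat_output.splitlines():
        fields = line.split()
        # TCP rows: proto, recv-q, send-q, local, foreign, state.
        # Headers, UDP rows (no state column) and "..." lines are skipped.
        if len(fields) == 6 and fields[0] in ("tcp", "tcp6"):
            yield fields[3], fields[4], fields[5]


def port_of(address):
    """Port part of an address such as 10.62.0.26:6379 or :::9988."""
    return address.rsplit(":", 1)[-1]


def listening_ports(netstat_output):
    """Set of local ports in LISTEN state."""
    return {
        int(port_of(local))
        for local, _, state in parse_tcp_rows(netstat_output)
        if state == "LISTEN" and port_of(local).isdigit()
    }


def remotes_on_port(netstat_output, port):
    """Remote addresses of connections going to `port`."""
    return [
        remote
        for _, remote, _ in parse_tcp_rows(netstat_output)
        if port_of(remote) == str(port)
    ]
```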

Checking logs

```shell
# Check the logs of a core service (or anything you added to docker-compose.yaml)
ubuntu@faasd:~$ journalctl -t openfaas:redis

# Let's see what happens on the gateway when we invoke our function
ubuntu@faasd:~$ journalctl -f -t openfaas:gateway
Mar 29 10:40:37 faasd openfaas:gateway[16070]: 2021/03/29 09:40:37 GetReplicas [legodb.openfaas-fn] took: 0.032137s
Mar 29 10:40:37 faasd openfaas:gateway[16070]: 2021/03/29 09:40:37 GetReplicas [legodb.openfaas-fn] took: 0.032228s
Mar 29 10:40:37 faasd openfaas:gateway[16070]: 2021/03/29 09:40:37 Forwarded [PUT] to /function/legodb/legosets-download-images - [500] - 0.402113s seconds
```
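To spot failing invocations in a longer journal, the gateway's "Forwarded" lines can be filtered by status code. The regular expression below is written against the line format shown above only. Other gateway versions may log differently, so treat it as an assumption.

```python
import re

# Matches the gateway's "Forwarded" lines in the format shown in the journal above.
FORWARDED = re.compile(
    r"Forwarded \[(?P<method>[A-Z]+)\] to (?P<path>\S+) - "
    r"\[(?P<status>\d{3})\] - (?P<seconds>[\d.]+)s"
)


def failed_requests(journal_lines):
    """Return (method, path, status, seconds) for forwarded requests with status >= 500."""
    failures = []
    for line in journal_lines:
        match = FORWARDED.search(line)
        if match and int(match["status"]) >= 500:
            failures.append(
                (match["method"], match["path"], int(match["status"]), float(match["seconds"]))
            )
    return failures
```

Feeding it the output of journalctl -t openfaas:gateway would list only the 5xx responses. In this case that is the PUT to /function/legodb/legosets-download-images.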

Oh, what the heck, let's just change the port in the environment variable and redeploy.

Voila! That worked. If you made it this far, go back to the TL;DR section above, where I make the obvious connection 🤦‍♂️