Photo by Eden Constantino on Unsplash

Here is my log book

Git repository for the functions.

The next part of my Lego database learning project is to schedule tasks that download the images. To do this I will need a task queue system.

In the past I have used Celery, but I came across RQ (Redis Queue) while reading the Flask Mega-Tutorial. Since I like using Redis, I decided RQ would be a natural fit.

Redis is an in-memory data structure store, used as a database, cache, and message broker.

Installing Redis on the faasd instance

As luck would have it, the book Serverless for Everyone Else covers how to install Redis directly on your faasd instance.

Learning action point: ideally, all the extra services would be installed and configured from a single config file rather than by hand. I am guessing cloud-init can take care of that.

SSH into the faasd instance.

```shell
# SSH into the faasd instance
$ ssh -i ~/.ssh/id_rsa -o PreferredAuthentications=publickey ubuntu@192.168.64.4
...
ubuntu@faasd:~$
```

Create the directory in which the Redis data will be stored.

```shell
ubuntu@faasd:~$ sudo mkdir -p /var/lib/faasd/redis/data
ubuntu@faasd:~$ sudo chown -R 1000:1000 /var/lib/faasd/redis/data

# Confirm
ubuntu@faasd:~$ sudo ls -la /var/lib/faasd/redis
total 12
drwxr-xr-x 3 root   root   4096 Mar 27 20:05 .
drw-r--r-- 4 root   root   4096 Mar 27 20:05 ..
drwxr-xr-x 2 ubuntu ubuntu 4096 Mar 27 20:05 data
```

Who is the user and group with ID 1000?

```shell
ubuntu@faasd:~$ whoami
ubuntu
ubuntu@faasd:~$ id
uid=1000(ubuntu) gid=1000(ubuntu) groups=1000(ubuntu),4(adm),20(dialout),24(cdrom),25(floppy),27(sudo),29(audio),30(dip),44(video),46(plugdev),117(netdev),118(lxd)
ubuntu@faasd:~$ id root
uid=0(root) gid=0(root) groups=0(root)

# The default user 'ubuntu' has a user and group ID of 1000
```

Next, add the Redis server as a service that faasd will install and manage.

```shell
ubuntu@faasd:~$ sudo nano /var/lib/faasd/docker-compose.yaml
```

Add this under the existing `services:` key in the file. YAML is indentation-sensitive, so keep the nesting:

```yaml
  redis:
    image: docker.io/library/redis:6.2.1-alpine
    volumes:
      - type: bind
        source: ./redis/data
        target: /data
    cap_add:
      - CAP_NET_RAW
    entrypoint: /usr/local/bin/redis-server --appendonly yes
    user: "1000"
    ports:
      - "9988:6379"
```

This publishes the Redis container's port 6379 as port 9988 on the faasd instance (the Multipass VM).

Restart faasd and verify

```shell
ubuntu@faasd:~$ sudo systemctl daemon-reload && sudo systemctl restart faasd

# Tail (follow) the Redis logs
ubuntu@faasd:~$ sudo journalctl -f -t openfaas:redis

# On my Mac, I checked whether the faasd VM port is exposed
$ nmap -Pn -p9988 192.168.64.4
...
PORT     STATE SERVICE
9988/tcp open  nsesrvr
```
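`nmap` only shows that something is listening on port 9988. To confirm that Redis itself is answering, you can send a `PING` and check for a `+PONG` reply. This is a minimal sketch that uses only the standard library and the RESP wire format, where a command is sent as an array of bulk strings. `encode_command` and `redis_ping` are hypothetical helpers, and the sketch assumes Redis has no password set, which is the case for the compose entry above.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


def redis_ping(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if the server at host:port replies to PING with +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(encode_command("PING"))
            reply = sock.recv(64)
    except OSError:
        # Connection refused, timed out, host unreachable, etc.
        return False
    return reply.startswith(b"+PONG")
```

While faasd is running, calling `redis_ping("192.168.64.4", 9988)` from the Mac should return `True`.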

Installing Python RQ (Redis Queue)

I will first install RQ locally and test it from my Mac before adding it to the OpenFaaS project.

```shell
$ cd learn-openfaas/legodb
$ source legodb/venv/bin/activate
(venv)$ pip install rq
(venv)$ pip freeze > legodb/requirements.txt
```

I have one terminal window open to monitor the Redis server logs.

```shell
ubuntu@faasd:~$ sudo journalctl -f -t openfaas:redis
```

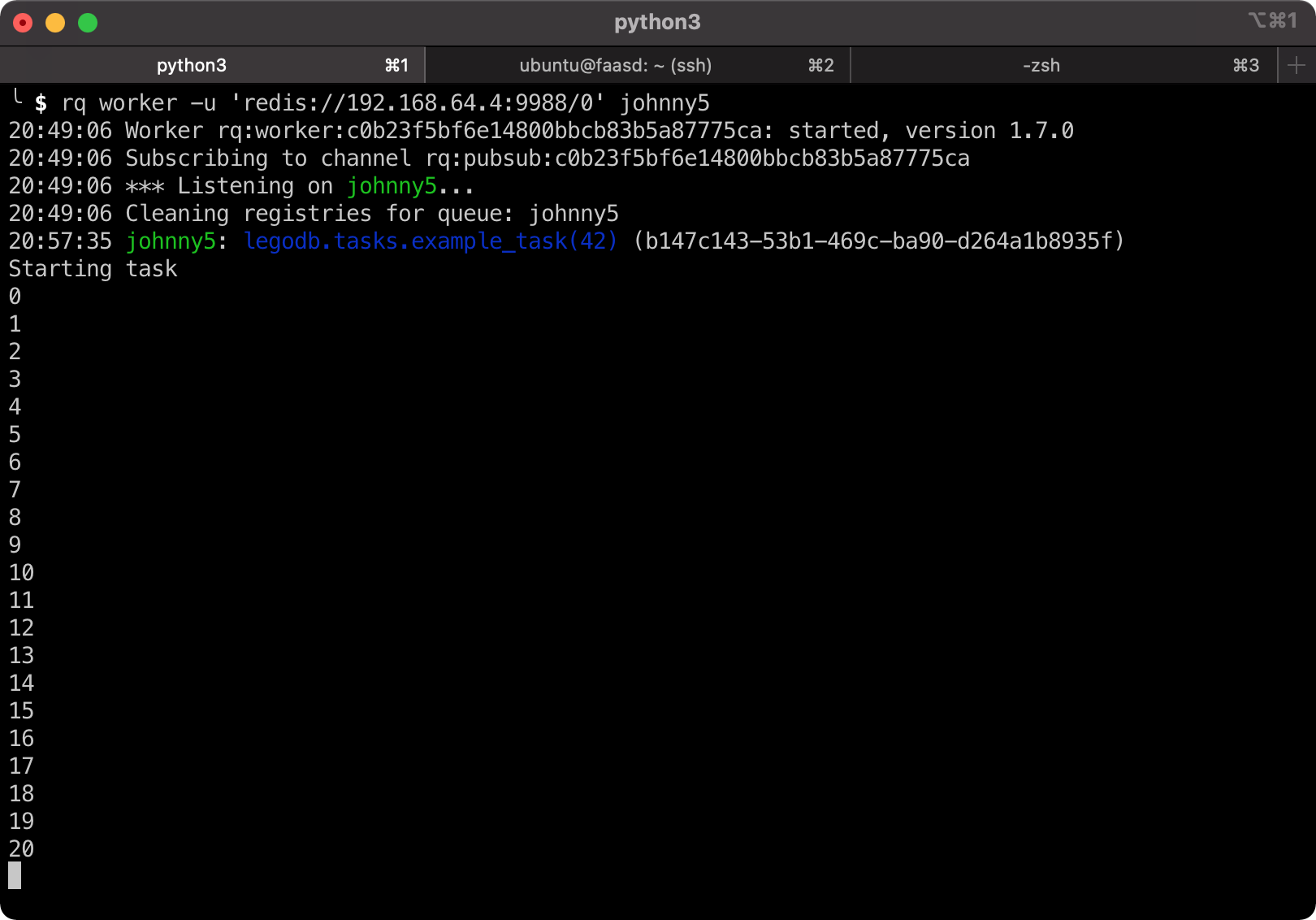

Create a worker

We need to start a worker that listens on a queue and processes the tasks placed on it.

```shell
# Need to figure out how to connect to the faasd Redis server (and not just localhost)
(venv)$ rq worker --help
...
-u, --url TEXT URL describing Redis connection details.

# OK, let's spin up a worker; the last argument (johnny5) is the name of the queue it listens on
(venv)$ rq worker -u 'redis://192.168.64.4:9988/0' johnny5
20:49:06 Worker rq:worker:c0b23f5bf6e14800bbcb83b5a87775ca: started, version 1.7.0
20:49:06 Subscribing to channel rq:pubsub:c0b23f5bf6e14800bbcb83b5a87775ca
20:49:06 *** Listening on johnny5...
20:49:06 Cleaning registries for queue: johnny5
```

Create a task and test it works

Add a new file named tasks.py in the same directory as handler.py. To test that Redis and RQ work, I used the example task from the Flask Mega-Tutorial.

```python
# tasks.py
import time


def example_task(seconds):
    """Count up to `seconds`, sleeping one second per step."""
    print('Starting task')
    for i in range(seconds):
        print(i)
        time.sleep(1)
    print('Task completed')
```

I then modified my indev.py file to enqueue the task and ran it:

```python
# indev.py
from redis import Redis
import rq

if __name__ == "__main__":
    # Test that RQ and Redis are working
    queue = rq.Queue('johnny5', connection=Redis.from_url('redis://192.168.64.4:9988/0'))
    # The task is passed as a dotted path; the worker imports it when the job runs
    job = queue.enqueue('legodb.tasks.example_task', 42)
    print(job.get_id())
```

```shell
(venv)$ python indev.py
b147c143-53b1-469c-ba90-d264a1b8935f
# ^^ the job.get_id()
```

The output from the worker:

```text
20:57:35 johnny5: legodb.tasks.example_task(42) (b147c143-53b1-469c-ba90-d264a1b8935f)
Starting task
0
1
2
...
41
Task completed
20:58:17 johnny5: Job OK (b147c143-53b1-469c-ba90-d264a1b8935f)
20:58:17 Result is kept for 500 seconds
```

Nice one! We have a worker running locally that can process tasks.

Is this really using the faasd Redis server?

I noticed that the openfaas:redis logs showed nothing about the worker or the tasks, so I wondered whether this was actually working the way I thought. I decided to do some testing.

```shell
# Stop faasd
ubuntu@faasd:~$ sudo systemctl stop faasd
```

Not long after stopping faasd, the RQ worker logged the following.

```text
...
21:06:52 Worker rq:worker:c0b23f5bf6e14800bbcb83b5a87775ca: found an unhandled exception, quitting...
...
redis.exceptions.ConnectionError: Connection closed by server.
Error 61 connecting to 192.168.64.4:9988. Connection refused.
```

OK, so the worker is indeed using the Redis server on our faasd instance. It also stopped running once the connection was lost.
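Because the worker exits when Redis goes away, it has to be restarted by hand. One way to avoid that is to wait until the Redis port is reachable again before (re)starting `rq worker`. This is a minimal stdlib sketch of such a wait with exponential backoff. `wait_for_redis` is a hypothetical helper, not part of RQ, and it only checks TCP reachability, not that Redis is healthy.

```python
import socket
import time


def wait_for_redis(host: str, port: int, attempts: int = 5, base_delay: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds, backing off exponentially.

    Sleeps base_delay, 2 * base_delay, 4 * base_delay, ... between failed attempts.
    Returns True on the first successful connection, False once all attempts fail.
    """
    for attempt in range(attempts):
        try:
            with socket.create_connection((host, port), timeout=2.0):
                return True
        except OSError:
            # Not reachable yet; wait before the next attempt (but not after the last one).
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    return False
```

A wrapper script could call `wait_for_redis("192.168.64.4", 9988)` and only then launch `rq worker -u 'redis://192.168.64.4:9988/0' johnny5` (for example via `subprocess.run`), looping whenever the worker exits. For workers that live on the faasd instance itself, a process supervisor such as systemd is probably the more natural fit.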

I started everything up again and did another test run.

```shell
ubuntu@faasd:~$ sudo systemctl start faasd

(venv)$ rq worker -u 'redis://192.168.64.4:9988/0' johnny5
...
21:16:37 *** Listening on johnny5...
21:17:24 johnny5: legodb.tasks.example_task(42) (d65a042d-b188-4f70-878e-c132cfb34b6c)
Starting task
...
```

What happens when the task does not exist? I changed the task path in indev.py to "legodb.tasks.example_task_fail" and ran it.

```shell
(venv)$ python indev.py
8a093e7a-a6d6-4746-98d1-9bdec7cce074
# ^^ Interestingly, we still got a job ID

# Worker output
21:18:11 johnny5: legodb.tasks.example_task_fail(42) (8a093e7a-a6d6-4746-98d1-9bdec7cce074)
... throws a big exception
AttributeError: module 'legodb.tasks' has no attribute 'example_task_fail'
```

OK, at least the worker didn't just quit; only that one job failed.
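The job ID comes back even for a task that does not exist because, when `enqueue` is given a string, RQ stores the dotted path and the worker only imports it when it runs the job. This standard-library sketch reproduces that kind of lookup and the same kind of `AttributeError`. `resolve_task` is a hypothetical helper, not RQ's actual code.

```python
import importlib


def resolve_task(dotted_path: str):
    """Turn a dotted path such as 'legodb.tasks.example_task' into the callable it names.

    Nothing is checked until this runs: a missing module raises ImportError,
    and a missing function raises AttributeError from getattr.
    """
    module_name, _, func_name = dotted_path.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, func_name)
```

For example, `resolve_task("json.dumps")` returns `json.dumps`. `resolve_task("json.dumps_fail")` raises an `AttributeError` saying module 'json' has no attribute 'dumps_fail', which has the same shape as the worker's error.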

What happens when no worker is listening on the specified queue? I changed the queue name in indev.py to "johnny5_fail" and ran it.

```shell
(venv)$ python indev.py
3a50f84f-47db-400e-8b6a-41ab5d8c2bd5
```

OK, this is interesting. No worker is listening on that queue, yet the job was still scheduled. My guess is that the job waits in Redis until a worker listening on that queue shows up for work.

Let’s clock that worker bee in.

```shell
(venv)$ rq worker -u 'redis://192.168.64.4:9988/0' johnny5_fail
...
21:25:09 johnny5_fail: legodb.tasks.example_task(42) (3a50f84f-47db-400e-8b6a-41ab5d8c2bd5)
Starting task
0
1
...
```

Nice! So you can enqueue jobs even when no worker is running to pick them up yet.
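This is the usual decoupling a queue provides: enqueueing only stores the job under the queue's name, and any worker listening on that queue can take it later. The toy model below shows the idea with in-memory deques standing in for Redis lists. `ToyQueueStore` is purely illustrative and is not how RQ is implemented; RQ keeps the jobs in Redis itself.

```python
from __future__ import annotations

import uuid
from collections import defaultdict, deque


class ToyQueueStore:
    """In-memory stand-in for named Redis lists, one FIFO per queue name."""

    def __init__(self) -> None:
        self.queues: defaultdict[str, deque] = defaultdict(deque)

    def enqueue(self, queue_name: str, func_path: str, *args) -> str:
        """Store a job on the named queue and return its ID immediately.

        No worker needs to exist, and func_path is not checked here.
        """
        job_id = str(uuid.uuid4())
        self.queues[queue_name].append((job_id, func_path, args))
        return job_id

    def next_job(self, queue_name: str):
        """What a worker listening on queue_name would do: take the oldest job, if any."""
        queue = self.queues[queue_name]
        return queue.popleft() if queue else None
```

A job enqueued on "johnny5_fail" stays put. `next_job("johnny5")` returns `None`, while `next_job("johnny5_fail")` hands back the waiting job.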

Next actions

Tomorrow I will start hooking this up to my OpenFaaS function and also see how we can start workers on the faasd instance reliably.